Clickbait blog post titles. Gotta love ’em. And this post doesn’t mention jank. You have been lied to!

What is Houdini?

Have you ever thought about the amount of work CSS does? You change a single attribute and suddenly your entire website appears in a different layout. It’s kind of magic in that regard (can you tell where I am going with this?!). So far, we – the community of web developers – have only been able to witness and observe the magic. What if we want to come up with our own magic tricks and perform them? What if we want to become the magician? Enter Houdini – we gave the magician a name so we can talk to him and make him do things the way we want… I’ll stop with the metaphor here before things get out of hand 🎩🌟🐇

The Houdini task force consists of engineers from Mozilla, Apple, Opera, Microsoft, HP, Intel and Google working together to expose certain parts of the CSS engine to the web developer. The task force is working on a collection of drafts with the goal to get them accepted by the W3C to become actual web standards. They set themselves a few high-level goals, turned them into specification drafts which in turn gave birth to a set of supporting, lower-level specification drafts. The combination of these drafts is what is usually meant when someone is talking about “Houdini”. At the time of writing, the list of drafts is still incomplete and most of the drafts are mere placeholders. That’s how early in development of Houdini we are. There is no bleeding edge to cut yourself on, yet.

Disclaimer: I want to give a quick overview of the Houdini drafts so you have an idea of what kind of problems Houdini tries to tackle. As far as the current state of the specs allows, I’ll try to give code examples as well. Keeping that in mind, please be aware that all of these specs are drafts and very volatile. There’s no guarantee that these code samples are even remotely correct in the future or that any of these drafts become reality at all.

The specifications

Worklets (spec)

Worklets by themselves are not really useful. They are a concept introduced to make many of the later drafts possible. If you thought of Web Workers when you read “worklet”, you are not wrong. They have a lot of conceptual overlap. So why a new thing when we already have workers? Houdini’s goal is to expose new APIs to allow web developers to hook up their own code into the CSS engine and the surrounding systems. It’s probably not unrealistic to assume that some of these code fragments will have to be run every. single. frame. Some of them have to by definition. Quoting the Web Worker spec:

Workers [...] are relatively heavy-weight, and are not intended to be used in large numbers. For example, it would be inappropriate to launch one worker for each pixel of a four megapixel image.

That means web workers are not viable for the things Houdini plans to do. Therefore, worklets were invented. Worklets make use of ES2015 classes as a nice way to define a collection of methods, the signatures of which are predefined by the type of the worklet. They are light-weight, short-lived and their methods are expected to be strictly idempotent. This is needed as not even the order of execution can be guaranteed to be consistent between different browsers. To catch non-idempotent implementations early, the browser is allowed (and encouraged) to spawn 2 worklets and dispatch calls randomly between the 2 instances, effectively making shared state between subsequent calls impossible.
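To make the idempotency requirement a bit more tangible, here is a minimal sketch in plain JavaScript. It does not touch any Houdini API: `dispatchRandomly`, `StatefulPainter` and `IdempotentPainter` are made-up names, and the dispatcher only imitates the two-instance behavior that browsers are allowed to have.

```javascript
// Hypothetical helper: imitates a browser that keeps two instances of a
// worklet class around and sends each call to one of them at random.
function dispatchRandomly(WorkletClass, method, calls, random = Math.random) {
  const instances = [new WorkletClass(), new WorkletClass()];
  return calls.map((args) => {
    const target = instances[random() < 0.5 ? 0 : 1];
    return target[method](...args);
  });
}

// Relies on state that survives between calls, so the results depend on
// which instance happens to receive which call.
class StatefulPainter {
  constructor() {
    this.callCount = 0;
  }
  paint(width) {
    this.callCount += 1;
    return width * this.callCount;
  }
}

// The output depends only on the arguments: same input, same output,
// no matter which instance is picked or in which order calls arrive.
class IdempotentPainter {
  paint(width) {
    return width * 2;
  }
}

const sampleCalls = [[10], [10], [10], [10]];
console.log(dispatchRandomly(StatefulPainter, 'paint', sampleCalls));
console.log(dispatchRandomly(IdempotentPainter, 'paint', sampleCalls));
```

Run it a few times: the stateful version will typically print different arrays from run to run, while the idempotent one always prints four times the same value.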

Paint Worklet (spec)

I am starting with this as it introduces the fewest new concepts. From the spec draft itself:

The paint stage of CSS is responsible for painting the background, content and highlight of an element based on that element’s geometry (as generated by the layout stage) and computed style.

This allows you not only to define how an element should draw itself (think of the synergy with Web Components!) but also alter the visuals of existing elements. No need for hacky things like DOM elements to create a ripple effect on buttons. Another big advantage of running your code at paint time of an element compared to using a regular canvas is that you will know the size of the element you are supposed to paint and that you will be aware of fragments and handle them appropriately.

Wait, what are fragments?

Intermezzo – Fragments

I think of elements in the DOM tree as boxes that are laid out by the CSS engine to make up my website. It turns out, however, that this mental model breaks down once inline elements come into play. A <span> may need to be wrapped; so while still technically being a single DOM node, it has been fragmented into 2, well, fragments. The spec calls the bounding box of these 2 fragments a fragmentainer. I am not even kidding.

Back to the Paint Worklet: Effectively, your code will get called for each fragment and will be given access to a stripped down canvas-like API as well as the styles applied to the element, which allows you to draw (but not visually inspect) the fragment. You can even request an “overflow” margin to allow you to draw effects around the element’s boundaries, just like box-shadow.

    class {
      static get inputProperties() {
        return ['border-color', 'border-size'];
      }

      paint(ctx, geom, inputProperties) {
        var offset = inputProperties['border-size'];
        var colors = inputProperties['border-color'];
        // Top edge
        this.drawFadingEdge(
          ctx,
          0-offset[0], 0-offset[0],
          geom.width+offset[0], 0-offset[0],
          colors[0]);
        // Right edge
        this.drawFadingEdge(
          ctx,
          geom.width+offset[1], 0-offset[1],
          geom.width+offset[1], geom.height+offset[1],
          colors[1]);
        // Bottom edge
        this.drawFadingEdge(
          ctx,
          0-offset[2], geom.height+offset[2],
          geom.width+offset[2], geom.height+offset[2],
          colors[2]);
        // Left edge
        this.drawFadingEdge(
          ctx,
          0-offset[3], 0-offset[3],
          0-offset[3], geom.height+offset[3],
          colors[3]);
      }

      drawFadingEdge(ctx, x0, y0, x1, y1, color) {
        // Opaque at both ends, fully transparent in the middle.
        var gradient =
          ctx.createLinearGradient(x0, y0, x1, y1);
        gradient.addColorStop(0, color);
        var colorCopy = new ColorValue(color);
        colorCopy.opacity = 0;
        gradient.addColorStop(0.5, colorCopy);
        gradient.addColorStop(1, color);
        // Assumption: the canvas-like context offers the usual path
        // methods, so the edge can be stroked with the gradient.
        ctx.strokeStyle = gradient;
        ctx.beginPath();
        ctx.moveTo(x0, y0);
        ctx.lineTo(x1, y1);
        ctx.stroke();
      }

      overflow(inputProperties) {
        // Taking a wild guess here. The return type
        // of overflow() is currently specified
        // as `void`, lol.
        return {
          top: inputProperties['border-size'][0],
          right: inputProperties['border-size'][1],
          bottom: inputProperties['border-size'][2],
          left: inputProperties['border-size'][3],
        };
      }
    };

Compositor Worklet

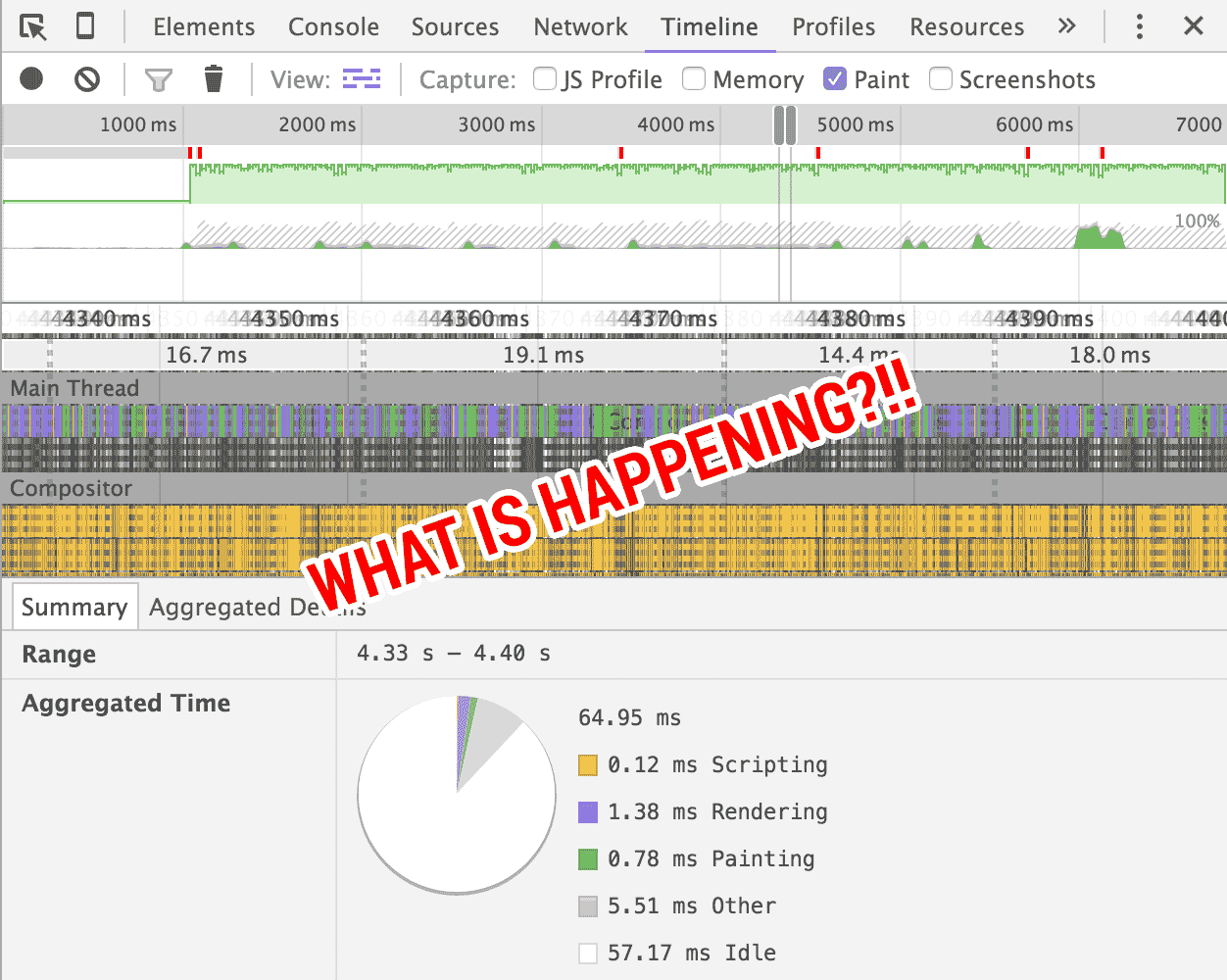

At the time of writing, the compositor worklet doesn’t even have a proper draft and yet it’s the one that gets me excited the most. As you might know, some operations are outsourced to the graphics card of your computer by the CSS engine, although that is dependent on both your graphics card and your device in general. A browser usually takes the DOM tree and, based on specific criteria, decides to give some branches and subtrees their own layer. These subtrees paint themselves onto it (maybe using a paint worklet). As a final step, all these individual, now painted, layers are stacked and positioned on top of each other, respecting z-indices, 3D transforms and such, to yield the final image that is visible on your screen. This process is called “compositing” and is executed by the “compositor”. The advantage of this process is that you don’t have to make all the elements repaint themselves when you scroll a tiny bit. Instead, you can reuse the layers from the previous frame and just re-run the compositor with the changed scroll position. This makes things fast. This makes us reach 60fps. This makes Paul Lewis happy.

As the name suggests, the compositor worklet lets you hook into the compositor and influence the way an element’s layer, which has already been painted, is positioned and layered on top of the other layers. To get a little more specific: You can tell the browser that you want to hook into the compositing process for a certain DOM node and can request access to certain attributes like scroll position, transform or opacity. This will force this element onto its own layer, and on each frame your code gets called. You can move your layer around by manipulating the layer’s transform and change its attributes (like opacity), allowing you to do fancy-schmancy things at a whopping 60 fps. Here’s a full implementation for parallax scrolling using the compositor worklet.

    // main.js
    var worklet = new CompositorWorker('worklet.js');
    var scrollerProxy =
      new CompositorProxy($('#scroller'), ['scrollTop']);
    var parallaxProxy =
      new CompositorProxy($('#image'), ['transform']);
    worklet.postMessage([scrollerProxy, parallaxProxy]);

    // worklet.js
    function tick(timestamp) {
      var t = self.parallax.transform;
      // m42 is the vertical translation of the transform matrix:
      // move the image at a tenth of the scroll distance.
      t.m42 = -0.1 * self.scroller.scrollTop;
      self.parallax.transform = t;
      self.requestAnimationFrame(tick);
    }

    self.onmessage = function(e) {
      self.scroller = e.data[0];
      self.parallax = e.data[1];
      self.requestAnimationFrame(tick);
    };

Layout Worklet (spec)

Again, a specification that is far from finished, but the concept is intriguing: Write your own layout! The layout worklet is supposed to enable you to do display: layout('myLayout') and run your JavaScript to arrange a node’s children in the node’s box. Of course, running a full JavaScript implementation of CSS’s flex-box layout will be slower than running an equivalent native implementation, but it’s easy to imagine a scenario where cutting corners can yield a performance gain. Imagine a website consisting of nothing but tiles à la Windows 10 or a Masonry-style layout. Absolute/fixed positioning is not used, neither is z-index, nor do elements ever overlap or have any kind of border or overflow. Being able to skip all these checks on re-layout could yield a performance gain.

    registerLayout('random-layout', class {
      static get inputProperties() {
        return [];
      }

      static get childrenInputProperties() {
        return [];
      }

      layout(children, constraintSpace, styleMap) {
        const width = constraintSpace.width;
        const height = constraintSpace.height;
        for (let child of children) {
          // Pick a random position inside the parent's box.
          const x = Math.random()*width;
          const y = Math.random()*height;
          // The child may only use the space that is left
          // to the right of and below that position.
          const constraintSubSpace = new ConstraintSpace();
          constraintSubSpace.width = width-x;
          constraintSubSpace.height = height-y;
          const childFragment = child.doLayout(constraintSubSpace);
          childFragment.x = x;
          childFragment.y = y;
        }

        return {
          minContent: 0,
          maxContent: 0,
          width: width,
          height: height,
          fragments: [],
          unPositionedChildren: [],
          breakToken: null
        };
      }
    });
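To illustrate what “cutting corners” could look like for the Masonry-style case, here is a small sketch in plain JavaScript. It deliberately stays away from the layout worklet draft: `masonryPositions` is a hypothetical helper of mine, and it assumes every tile has a known height, all columns are equally wide and nothing overlaps, so there is nothing to check for z-index, borders or overflow.

```javascript
// Hypothetical helper, not part of the layout worklet draft: places tiles
// of known heights into equally wide columns, always picking the column
// that is currently the shortest.
function masonryPositions(tileHeights, columnCount, columnWidth, gap = 0) {
  const columnBottoms = new Array(columnCount).fill(0);
  return tileHeights.map((height) => {
    // Find the column whose bottom edge is closest to the top.
    let column = 0;
    for (let i = 1; i < columnCount; i++) {
      if (columnBottoms[i] < columnBottoms[column]) column = i;
    }
    const position = {
      x: column * (columnWidth + gap),
      y: columnBottoms[column],
      width: columnWidth,
      height: height,
    };
    columnBottoms[column] += height + gap;
    return position;
  });
}

const tiles = masonryPositions([100, 50, 80, 40], 2, 200);
console.log(tiles);
```

The whole layout is one pass over the tiles with a tiny inner loop over the columns. Whether a real layout worklet would end up this simple depends entirely on how the draft evolves.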

Typed CSSOM (spec)

That’s enough worklets for now, let’s get back to some good ol’ APIs. Typed CSSOM (CSS Object Model) addresses a problem we probably all have encountered and just learned to put up with. Let me illustrate with a line of JavaScript:

$('#someDiv').style.height = getRandomInt() + 'px';

We are doing math, converting a number to a string to append a unit just to have the browser parse that string and convert it back to a number for the CSS engine to use. This gets even uglier when you manipulate transforms with JavaScript. No more! CSS is about to get some typing, yo! This draft is one of the more mature ones and a polyfill is actually already being worked on (Disclaimer: Using the polyfill will obviously add even more computational overhead. The point is to show how convenient the API is).

Instead of strings you will be working on an element’s StylePropertyMap, where each CSS attribute has its own key and corresponding value type. Attributes like width have LengthValue as their value type. A LengthValue is a dictionary of all CSS units like em, rem, px, percent, etc. Setting height: calc(5px + 5%) would yield a LengthValue{px: 5, percent: 5}. Some properties like box-sizing just accept certain keywords and therefore have a KeywordValue. The validity of those attributes could now be checked at runtime.

    var w1 = $('#div1').styleMap.get('width');
    var w2 = $('#div2').styleMap.get('width');
    $('#div3').styleMap.set('background-size',
      [new SimpleLength(200, 'px'), w1.add(w2)]);

    $('#div4').styleMap.get('margin-left');
    // => {em: 5, percent: 50}
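If the “dictionary of units” idea sounds abstract, the following sketch models it in plain JavaScript. `LengthSketch` is a hypothetical stand-in of mine and not the draft’s LengthValue; it only shows how adding lengths can work without ever parsing a string, and how calc(5px + 5%) maps to {px: 5, percent: 5}.

```javascript
// Hypothetical stand-in for the draft's LengthValue: a dictionary that
// maps CSS units to numbers. This is not the real Typed CSSOM API.
class LengthSketch {
  constructor(units = {}) {
    this.units = { ...units };
  }

  // Adds two lengths unit by unit, without any string parsing.
  // Returns a new object and leaves both operands untouched.
  add(other) {
    const sum = { ...this.units };
    for (const [unit, value] of Object.entries(other.units)) {
      sum[unit] = (sum[unit] || 0) + value;
    }
    return new LengthSketch(sum);
  }

  // Serializes back to CSS text, which is only needed at the very end.
  toString() {
    const parts = Object.entries(this.units).map(
      ([unit, value]) => value + (unit === 'percent' ? '%' : unit)
    );
    if (parts.length === 0) return '0';
    return parts.length === 1 ? parts[0] : 'calc(' + parts.join(' + ') + ')';
  }
}

const fivePx = new LengthSketch({ px: 5 });
const fivePercent = new LengthSketch({ percent: 5 });
console.log(fivePx.add(fivePercent).units);
console.log(String(fivePx.add(fivePercent)));
```

The numbers stay numbers for as long as you are doing math, which is exactly the round trip through strings that the one-liner further up can’t avoid.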

Font Metrics

Font metrics is exactly what it sounds like. What is the bounding box (or the bounding boxes when we are wrapping) when I render string X with font Y at size Z? What if I go all crazy unicode on you like using ruby annotations? This has been requested a lot in the past and Houdini should finally make these wishes come true.

Properties and Values (spec)

Do you know CSS Custom Properties (or their unofficial alias “CSS Variables”)? This is them but with types! So far, variables could only have string values and used a simple search-and-replace approach. This draft would allow you to not only specify a type for your variables, but also define a default value and influence the inheritance behavior using a JavaScript API. Technically, this would also allow custom properties to get animated with standard CSS transitions and animations, which is being considered.

    ["--scale-x", "--scale-y"].forEach(function(name) {
      document.registerProperty({
        name: name,
        syntax: "<number>",
        inherits: false,
        initialValue: "1"
      });
    });
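Why would a type make custom properties animatable? The sketch below is my own plain-JavaScript illustration, not the draft’s algorithm. `registerPropertySketch` and `interpolate` are hypothetical names, only the "<number>" syntax is handled, and the choice to flip unregistered values halfway through is an assumption made for the sake of the example.

```javascript
// Hypothetical mini-registry, loosely modeled on the registerProperty()
// call above. It only understands the "<number>" syntax.
const registry = new Map();

function registerPropertySketch({ name, syntax, initialValue }) {
  if (syntax !== '<number>') {
    throw new Error('This sketch only supports <number>');
  }
  const parsed = Number(initialValue);
  if (Number.isNaN(parsed)) {
    throw new Error(initialValue + ' is not a valid <number>');
  }
  registry.set(name, { syntax, initialValue: parsed });
}

// Computes the value at a given progress (0 to 1) between two values.
// A registered <number> can be blended. An unregistered property is just
// an opaque string, so this sketch simply flips from one value to the
// other halfway through.
function interpolate(name, from, to, progress) {
  if (!registry.has(name)) {
    return progress < 0.5 ? from : to;
  }
  const start = Number(from);
  const end = Number(to);
  return String(start + (end - start) * progress);
}

registerPropertySketch({ name: '--scale-x', syntax: '<number>', initialValue: '1' });
console.log(interpolate('--scale-x', '1', '2', 0.25));      // "1.25"
console.log(interpolate('--unregistered', '1', '2', 0.25)); // "1"
```

Once the engine knows a value is a number rather than an arbitrary string, computing in-between values is straightforward, which is what makes transitions and animations on custom properties conceivable.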

But wait, there’s more!

There are even more specs in Houdini’s list of drafts, but the future of those is rather uncertain and they are not much more than placeholders for an idea at this point. Examples include custom overflow behaviors, CSS syntax extension API, extension of native scroll behavior and similar ambitious things that allow you to screw up your web app even worse than ever before. Rejoice!

Gimme!

I had the privilege to play around with a “frankenstein build”, that had hacky and spec-incompatible implementations of some of the Houdini drafts. It was a lot of fun seeing my tab randomly crash because I dared to hover over DevTools. Everything is very much in flux so actually making engineers spend their time on implementing the drafts over polishing them is probably not a good idea.

However, the first of these specs may be available behind a “OMG this is totally not for production” flag soon(tm) in Canary.

For what it’s worth, I have open-sourced the code for the demo videos I made so you can get a feeling for what working with worklets (put that on a shirt!) feels like.

If you want to get involved, there’s the Houdini mailing list. Feel free to hit me up on Twitter if you have any questions.